Monday, October 4, 2010

MSI issues with SQL Server 2005 and Vista

As a matter of fact, there are numerous KB's and newsgroups and web gripes about this issue, dating back to the pre-XP world. The "nice" thing, as with most things MS, is that not one of these fixes works for ALL issues of this type. My particular issue recently was the uninstallation of SQL Server 2005 (Standard and Express, 32-bit and 64-bit) from two Vista Pro 64-bit machines.

So, I first did what any self-educated genius would do.

From the command line I ran:

c:\windows\system32\msiexec.exe /unregister

c:\windows\system32\msiexec.exe /regserver

c:\windows\sysWOW64\msiexec.exe /unregister

c:\windows\sysWOW64\msiexec.exe /regserver

...thinking this would fix my issue. However those nagging errors kept popping up. Oh yeah, did I forget to mention the errors I was recieving? I recieved to MSI errors: 1719 and 1603. Looking this up will produce the numerous KBs, newsgroup postings, etc. that I alluded to above. Additionally, I recieved an error message indicating that the Windows Installer Service could not be initiated due to system permissions and to contact the system administrators and to...blah blah blah...

So what fixed it, some might be asking:

Downloading the MSI Clean up utility from MS (MSICUU2.exe) and running this on the boxes in question. However, for some unkown and yet distinctively MS-type behavior, running the MSICUU2.exe from the GUI (right-click->run as administrator) did not work.

What did work was using the command line to access the MSICUU2.exe application via the command line AND using the runas command.

So, Vista again dissappoints and makes me wish EVERYTHING was *nix. It is dissappointing that the GUI option to run as an administrator has seperate permissions than the command line option to run as an administrator. You just "have" to love Windows. :-(

Sunday, October 3, 2010

Skype shot my CSS in IE8!!!(???)

What I did: Tested both pages in Firefox and IE8. Both pages would initially load correctly. The initial "index.html" worked as advertised in both browsers: the viewer was able to switch repeatedly between CSS's through the use of some simple Javascript buttons. However, the second page (stored in the same parent directory and using the EXACT same relative paths) would NOT use the first stylesheet in IE8 after the initial page load. It would toggle between stylesheets, but when stylesheet 1 was selected, the viewer was presented with default, left-align, text.

What I have:

- Skype

- Windows 7 Professional

- IE8

- Firefox 3.6.10

Debugging/Troubleshooting:

There were NO issues with FireFox, so I was automatically inclined to believe that the failure lied with Microsoft. I re-wrote the webpage at least six times (not that fun) and re-wrote the CSS sheets at least three. Additionally, all were validated multiple times through the W3C tools (XHTML and CSS). However, NOTHING seemed to work.

The Lightbuld:

I decided that maybe I should try to isolate from outside my current box. My wife's computer is exactly the same, with the exception of not having Skype installed (I try to avoid having Skype as much as possible, which is a WHOLE different topic). On her laptop the webpages BOTH acted correctly between the two stylesheets, on both IE8 and FireFox. I did run the IE8 Developer Tools (F12) in attempt to identify the issue, but that was no help. It was when I looked at the plug-ins that I noticed a difference:

It MUST be the Skype plug-in.

Now What:

All I can deduce at this point is that the Skype Add-in for IE8 (the one that allows you call-by-click from numbers on website) is the culprit. However, I have NOT been able to identify why. I do seriously wonder if this is an indication of how IE8 renders XHTML pages when the Skype plug-in is installed, and, MORE IMPORTANTLY, if this is not an indication of a bug in skype that could indicate a vulnerability between IE8, Skype, and parsing. I haven't had a lot of time to research this, so at this point it is purely conjecture based upon what I have seen. However, I do have a friend who had the EXACT problem and indications that Skype is the culprit.

Yet One MORE reason NOT to like Skype!

Friday, September 10, 2010

Quick and Dirty on vSphere 4

1) Installing ESX servers and using the vSphere vCenter Server management client. The major release that I have been working with is 4. Sooooo, I would assume that any minor relase would work with any other minor release. But NOOOOOO, leave it to VMware to over-complicate everything. Lesson here:

- if you have more than one ESX server and are using the vCenter Server, here is the scoop: Remote management from the client is easier when all ESX servers are the same build. Additionally, the management of the ESX servers through the vCenter Server will not work if the vCenter server is 4.0 and one or more of your ESX servers are 4.1.x. If you have this setup, you will most likely end up with an error along the lines of "vmodl.fault.HostCommunication"...although I admit I don't recall the exact error string. There are two fixes that I found for this. The first one involves updating the vCenter Server....but this must be on a 64-bit machine as the update is not supported on the 32-bit machine. The second fix is that of downgrading the ESX server to the 4.0 build. The downgrade wasn't bad AND more importantly, the downgrade (or upgrade) allows you to preserve your data store.

2) View Connection Server: If the machine you are trying to serve up shows "no desktop source available," there are a couple of possilbe issues here.

- You may not have the agent installed in the virtual machine

- The name you have used for your machine may not match the name on your client settings if you specified a particular desktop for one user

- Sometimes this error has shown from me when there has been a DNS issue on the domain.

3) Cloning/migrating:

If you have to clone or migrate a virtual box and will be serving it up via the View Connection Server, it proved neccessary for me to run the View Connection Agent installer again and select "Repair." Although I used customization settings to ensure differing hostnames after a clone, I still found that the View Manager Server would NOT see the machine until I "repaired" the installation.

Friday, June 25, 2010

Windows 7 and VMWare

The biggest issue that I had with creating the virtual Windows 7 boxes (AND Windows Server 2008 R2) was that I was getting this error message:

"A required CD/DVD drive device driver is missing. If you have a driver floppy disk, DC, DVD, or USB flash drive, please insert it now."

Before explaining the fix that I "found" for this error, I should probably include the other hardware and software I am working with for this little project:

- 1 ESX Server installed on a Penguin server

- 1 Penguin Desktop - Vista

- 2 Windows 2003 R2 Servers (one for the VMWare vSphere/vCenter Server, one for the VMWare Connection Server

- 1 IGEL Thin Client with both RDP (5 and 6.1) support and VMWare View Client installed.

- ALL VMWare software is at least release 4.0.0.

- Guests (Virtual) OS's

- XP 32-Bit

- XP 64-Bit

- Vista 32-Bit

- Vista 64-Bit

- Windows 7 32-Bit

- Windows 7 64-Bit

- Red Hat Enterprise Linux 5.1 (HAD to have at least one Linux box. :-) )

So, with all of that, I am in the process of testing different functionalities. The desire is to have a set of virtual workstations that I can push to the thin clients and that support streaming video, bi-directional audio, memory-intensive applications, and all of this with as minimal network impact as possible. I will discuss the overall evaluation results later in this post, or at a later date. :-)

Now back to the Windows 7 installation problem. The error message that I recieved:

"A required CD/DVD drive device driver is missing. If you have a driver floppy disk, DC, DVD, or USB flash drive, please insert it now."

is an artifact of the type of CD/DVD drives and drivers that Windows 7 recognizes during install. This message will actually appear after files have started copying to the local hard drive. That said, this message can be returned to a user under more than one situation, making it a pain to troubleshoot.

If you research this error message, a lot of what you will find is that people automatically assume that it is a bad ISO file. While this could be true, this was not my problem as I was using a disk, not an ISO. However, it may be good to list some of the reasons for this message, and how I determined that they did or did not apply. If you are reading this and just looking for the fix...scroll down further. :-)

Some Causes of the Error Message:

1) Trying to install from a corrupt ISO file.

- This did not apply to me as I was loading from a DVD. However, I will acknowledge that I re-downloaded the ISO and re-burned the DVD a second time just to rule out a bad ISO, and number 2 below.

- If you are using an ISO, there are some things you can do to verify that it is comoplete and not corrupt: Check the Hash value of the ISO, Check the size of the ISO, and do this against the listed Hash and Size values from your download site.

2) A Faulty DVD.

- While this was possible in my case, I ruled it out (See number 1 above).

3) A bad CD/DVD Driver on the host system.

- One would think that this would be the biggest culprit, given the error message. However, the biggest solution found on the net is number 1 above.

- This was in part my problem. When I installed the ESX Server, I didn't give much thought to drivers for hardware as I really didn't know at the time how far I was going with the server itself. Bottom line here is that I need to find a Windows 7 "approved" CD/DVD Driver and install this on the ESX Server. As a side note here, the ESX Server runs on a Linux Kernel (the exact version escapes me right now).

4) A bad CD/DVD Driver on the virtual system.

- This was in fact the other part of my problem. Contrary to popular belief, VMWare vSphere 4.0 is not "perfectly" compatible with Windows 7. In fact, as I may post later, there are some other issues that arose during this evaluation that were specifically related to the lack of complete OS support for Windows 7.

So, HOW did I fix this problem and create some virtual Windows 7 workstations????

I went the LONG way, on purpose (I'll explain in a bit).

THE FIX:

On a seperate machine on my network, I had already had a small virtual network setup inside a VMWare Server. Using this setup, and an ISO of Windows 7 Ultimate 32-Bit, I created two working virtual machines running Windows 7. Once I had created these two, it was a somewhat simple matter of getting the virtual "machine" onto the ESX server.

There are multiple options for transfering a virtual machine to an ESX server.

One option is to FTP directly to the ESX server and upload the virtual machine, and then run the VMWare converter tool. This is not the option that I chose. The ESX server does not come with FTP enabled by default and I did not feel like enabling FTP nor doing some of the things required for security compliance. For those curious, there is an RPM from VMWare for FTP, "vsftp," that can be used.

The method I chose took only slightly more work than FTP'ing directly to the ESX server. However, it was clean and worked. What I did was transfered the virtual machine folder (YES, the WHOLE FOLDER) to the Windows Server 2003 that contained my vCenter Server. After doing this, and I wish I had some screen shots, I logged into the vCenter Server itself using the vSphere Client. Once logged into the vCenter Server, I was able to browse the datastore that exists on the ESX server. Browsing the ESX Datastore through the vCenter server allows for the uploading of a file OR Folder. I obviously chose the folder. After the upload was complete, I browsed into the folder I just uploaded and selected the ".vmx" file. Right-clicking on this file will show the option to "Import this machine." After some conversion time, VOILA, my ESX Server now contained a working virtual machine using Windows 7 Ultimate.

I realize that this might not be the most optimum fix for long term issues requiring Windows 7 or Server 2008 R2. However, it was more important to prove that there was a method, other than FTP, that would allow me to create a virtual machine outside of the ESX Server, and them move it to the ESX Server.

Sunday, April 25, 2010

XML Parsing and SOAP messages

When a Web Service is created in Visual Studio, the service can be launched in a browser window. This page will show you the service available as well as a link to the WSDL document, and comments on the namespace if it is set to the default URL of tempuri.org. The screenshot below shows an example of this page.

Clicking on a Service name will allow you test the functionality of the service. Here is where an important note is annotated. When testing the operation directly, the parameters used are sent using HTTP POST. However, when accessing the Web Service through another application/service, the parameters are passed using SOAP messages by default.

So why is this important you might ask? Something gets lost in translation with the differences between HTTP POST and SOAP messages. When attempting read an attribute from an XML element, one can use the XMLReader.GetAttribute() function. This function is overloaded to allow for example: an int parameter (the index value of the attribute) or a string value of the attribute's XName. So, when parsing an XML document, one way to do this (using Visual Studio 2008, C#) is with something similar to the following:

using (XmlReader reader = XmlReader.Create(url))

{

reader.Read();

reader.ReadToFollowing("DestinationInteraction");

reader.MoveToFirstAttribute();

StartOp = reader.GetAttribute("href");

reader.Close();

}

This block of code will create an XML reader object (reader) and will then move to first Element with a name value of "DestinationInteraction." The reader is then moved to the first attribute of the Element and then assigns to (string)StartOp the value of the "href" attribute. An example of the portion of the WSCL document that this is reading is:

Notice that this Element has no values, only an attribute of "href" that has the value "OPName." And this is where the fun begins.

Parsing the value of Elements in an XML document is generally very easy. A developer can code such that only the required Element(s) is accessed (reducing overhead), or can parse the entire document, storing needed values for the application. It's the parsing of attributes, specifically using an "int" as the parameter that presents a problem.

The bottom line is this:

When using HTTP POST the XMLReader.GetAttribute(int i) will work properly. However, when attempting to use the int parameter in a SOAP message, this failed for me every time, with either an out-of-bounds error message (attributes can be accessed like an array [0...n]) or an invalid parameter error message. Needless to say that this was very frustrating for me, especially since I spent a LOT of time on this only to realize that the easy answer was to use the string parameter.

Using XMLReader.GetAttribute(string s) function allows the operation to execute properly (as long as s is a valid XName of the attribute) in both HTTP POST actions and with SOAP messages.

Unfortunately, this was another thing on which I spent a LOT of time that I really didn't have. I was not able to determine the cause of the problem, although it is obviously something in the way the SOAP messages are being handled. I assume it's the way that the client side is parsing the SOAP message and believe that although the SOAP message passes the int parameter as an int, the client-side is most likely parsing the int and casting it to a string. I have finals this week and will be focused on that in my free time. However, as SOON as my finals are over, I plan to spend some time on this one as I really want to know what the failure mechanism was with this operation.

Making a Change and Who You Know

The back story:

When I was leaving the Army, I was contacted by a woman who works for the Georgia Department of Labor, Veterans Assistance. I was this woman's first client, and she really went the distance to help me find suitable employment in order to care for my family. It was obviously a stressful time (leaving the Army was medically forced due to a stupid surgeon, not by choice) and this woman really helped, and probably more than she even realizes. So with all of that said, I have still stayed in contact with her and have tried to pass on job information to her so that she may help other service members being medically discharged.

The email:

The woman I reference sent me an email asking if I was happy at my current job and if she could pass along my information to someone. Although I have been extrememly happy with my current job, I felt that out of respect for her, I would at least listen to what this other company was offering. This is where the "who you know" comes into play, in addition to being willing to take a chance.

The outcome:

I am starting a new job in a week. This new job is programming based, as opposed to network security. I am very, very excited about this new job as I have been wanting to be able to focus more on programming than just the occasional tool I build or the programs I write for school projects. Furthermore, since I AM working on my Master's in Software Engineering (from the University of Michigan!!!), I think that having more real-world experience is important and gives credence to my pending degree. The real dilema here is that I really do love my current job. If they could come close to what the new company has offered, I would stay. However, I don't think that that will happen.

In any event, it is a win-win for me. While I will miss my current job and the fun I have there, I am certain the new job will be just as fun, even though I KNOW it will be a huge challenge with a LOT of pressure to perform quickly.

My two biggest passions when comes to work are network security and programming. Now I will be changing focus to the programming and I know that the challenges this presents will be fun, if not a little stressful. :-)

HTTP 404.8 ERROR

My current environment consists of:

- Windows 7 Pro

- Visual Studio 2008 Pro

- IIS 7.5

The HTTP 404.8 ERROR:

- Hidden Namespace. The requested URL is denied becuase the directory is hidden.

What does this mean???

This error is apparently returned when a directory is listed in the RequestFiltering/HiddenSegments of the applicationHost.config file for IIS. The fix for this is partially found at (http://support.microsoft.com/kb/942047). I say partially becuase it is still up to the user to determine which directory is the problem. However, I will still post the fix instructions here, with the caveat that this "might" not be the solution to everyone else's problem with this error.

Steps:

- Open Notepad as an Administrator (right click on Notepad, select "Run as administrator")

- Open the file named "%windir%\System32\inetsrv\config\applicationHost.config.

- Find the

- Here's the annoying part...at least for me.

- In the

The unanswered question here is: "What caused this error in the first place?" What was I doing when the error was returned? I was trying to use a Web Service instance in a project I was working on. This Web Service instance has been used in multiple projects and this 404.8 had never been returned before. In fact, when I recieved this error, I attemped to see if other Web Services I had recently created returned the same error when I tried to access them through my main application; the same error was in fact returned by all Web Services when accessed through the application, but not when I used the "View in browser" option for each service in VS2008. As frustrating as this was, I was under a serious time crunch to get the application working, and as such I have still not had time to properly research why this error started happening.

I do strongly believe the error to have been caused by some update to either Windows 7, IIS 7.5, or (even less likely) Visual Studio 2008. The update had to have been applied since the middle of February, as that is the last known time that the Web Service in question was successfully used. I may post a question to the forums on these, but it depends on time. Maybe somebody reading this can tell me why this error just "magically appeared" one day.

Friday, April 2, 2010

Chunky Data (HTTP Headers, Part Deux)

That all said, it is important sometimes to review the "basics." So, here is another bit of information on HTTP Header information.

Field: Transfer-Encoding ";" 1#transfer-coding

Where 1#transfer-coding is the token defining what transfer encoding is being used.

I have seen even the the best of analyst make mistakes on what "Transfer-Encoding:chunked" means, so here goes:

This type of Transfer-Encoding ("chunked") is a method of coding is used to transport HTTP messages. The RFC (link below) can be read for the full details, but the big thing that I see people getting confused about...it's those numbers that appear in the message stream:

HEADER

AAA

.......

......

.....

HEADER

BBB

......

....

..

0

Where AAA and BBB are (in HEX) giving the size of the chunked portion of the message. As in the picture below, a final chunk will (must) end with a zero.

Sometimes, when following a stream in Wireshark, these numbers will "appear" in the middle of the data like:

......

XXX

.....

0

Either way, this number is not part of the actual message. Chunked encoding does not encode the payload specifically, It is used, as the RFC states, to encode the full message.

What is the lesson here: be careful when analyzing HTTP stream data. Do not include the chunk size values as part of the actual message when you are trying to decode.

Friday, March 26, 2010

HTTP Headers Part I

What I plan to put here now is just some basic information involving HTTP Headers. Time permitting and memory working, I am going to try to go a little deeper and cover other important aspects of this traffic, using WireShark screen shots. I think I would eventually like to expand this to include other web traffic protocols such as HTTPS, SSL(TLS), DNS, etc.

For anyone who may not know:

HTTP stands for Hypertext Transfer Protocol. According to the RFC, this is an "application-level protocol for distributed, collaborative, hypermedia information systems." It has been around since at least 1990 and its current version is 1.1. HTTP 1.1 is defined rather extensively in 2616 [1] (so I have no plan to summarize this entire RFC, just hitting what I think are important or misunderstood parts of the header as related to intrusion analysis).

HTTP communication involves a client and a server. A server in this instance does not have to be an actual Web/Domain/Mail server, but is any system that will respond, typically on ports 80 or 8080, to HTTP requests. Likewise, the client is any system that can send HTTP requests and handle HTTP responses.

HEADERS:

There are three basic types of HTTP Headers: general-header, request-header, response-header, and entity-header

Fields:

Connection - This field specifies options for a particular connection that MUST NOT be passed further by proxies. This field should NOT include end-to-end fields (Cache-Control is a great example given in the RFC). End-to-end headers are those headers that are necessary for the client/server communication, or that specify client/server communication that would be useless in any other form. The reason that the Cache-Control is a great example is that this field tells the client handler how long to keep a page cached, if at all, in addition to a few other parameters that are optional. The client would have to communicate all the way back to the server for a refresh. In the same sense, the server writes down the Cache-Control header to the client, so having this data parsed and dropped by proxies would make the Cache-Control field useless. As another note on this field, and one that is covered by the RFC, the "close" option for this header is important in that it signals that the connection must/will be closed after response completion.

Content-Encoding - This represents what type of coding (compression) is used on the data being transferred. The most common token I have seen is the gzip token, which indicates a file compressed with GZIP. There are four definitely registered values for this field. There can be more used, even private schemes unknown to anyone but a bad actor, as this fields tokens are only encouraged ("SHOULD") to register with IANA.

Content-Type - This is one of my more favorite header fields. This field, normally, will tell you what type of data to expect in that portion of the traffic. For example: image/jpeg would indicate a jpeg (picture) file is in the same stream of traffic. This also means that the start of the data should contain one of the jpeg file headers, JFIF for example, and not a file header from a different type, such as MZ for a Windows executable.

Content-Location - Another interesting field. This typically states where the requested resource is located. For example, if a client is requesting a pdf file from server.com, this field may also contain the relative URI to the resource, in this case it could be: http://someserver.com/someFile/BadGuy/bad.pdf. The interesting thing here is that I have seen exploits that will drop a temporary pdf file on the client machine and then use subsequent traffic to call that file...and the Content-Location will have the location as a folder on the client machine (c:\temp\bad.pdf). Additionally, this can also be used for re-directs, which I have scene a LOT with fake anti-virus issues. A connection between your box and IPA 1 may exist, a script may run or a button clicked that initiates a GET request for the malware and the Content-Location field will have something other than the server that was initially connected to.

Referer - Yes...it's spelled incorrectly. Furthermore, it can be programmatically set to a bad URI. This field is used in a request-header to "document" the location of where the Request-URI came from. Basically, it's "give me this object" (Request-URI) that I found the address to at "this site." Clicking on a link on the MLB home page that leads to the main Detroit tigers page would contain the Request-URI of "www.detroittigers.com" and a Referer of "www.mlb.com." This field is used to create track-backs links. The security concern here that every analyst should be aware of is: this field is not always accurate and could have been programmatically changed. Because this field can be used to create a list for optimized caching, it can by programmatically changed in order to have the URI "refreshed" from a bad actor.

Accept-Language - This indicates that language that the client would like the requested resources to be formatted in. This is an Internationalization (I18N) comparability issue, but does produce something interesting for analyst. If I see that a requester's Accept-Language token is set to "en-ca" and I know that Canada always tries to infiltrate my network, I would be more inclined to include this traffic in deeper research, eh. If nothing else, it would allow me to select multiple items for aggregation prior to analysis.

References:

[1] http://www.w3.org/Protocols/rfc2616/rfc2616.html

Tuesday, March 23, 2010

Call-By-Value/Call-By-Reference

Now, tonight I really have no desire to compare languages and their particualar method(s) of parameter passing. What I plan to do here is extend an answer that I recently passed onto someone else. So what follows is just a 'copy and paste' of an email I sent earlier...so I hope I didn't mistype anything! :-)

--from email--

Call-by-value and call-by-reference can be a little confusing, especially when you consider that they are implemented differently in different languages. One note of caution before going further is that in some languages, passing a pointer is still "technically" a call-by-value parameter until you actually deference it in the function; likewise, passing of the actual address ( &addOfMyVar ) is the actual address until you reference it's value. So the caution here is that, when working with pointers, be careful to deference/reference as needed. Not taking care of this could/would produce undefined/undesired results or errors.

Generally, I can't think of a normal situation where you would explicitly return by reference. To export data from a subprogram/function/etc, you would normally do one of two things:

Assign a variable the value of the return:

- someVar = myFunction(int x, int y, int z);

of, you would pass-by-reference those variables that you need changed in the caller's scope to the submodule

- myFunction (int x as ref, int y as ref, int z as ref);

That all said:

Given the below example, the full output should be expected to be:

3 2 1 // first write of submodule

4 5 6 // second write of submodule

6 2 3 // final write of main, after submodule has returned.

Example:

Main

Declare X, Y, Z As Integer

Set X = 1

Set Y = 2

Set Z = 3

Call Display (Z, Y, X)

Write X + " " + Y + " " + Z End Program

Subprogram Display ( Integer Num1, Integer Num2, Integer Num3 As Ref)

Write Num1 + " " + Num2 + " " + Num3

Set Num1 = 4

Set Num2 = 5

Set Num3 = 6

Write Num1 + " " + Num2 + " " + Num3

End Subprogram

Output Explained:

The reason for this:

- 3 2 1 // line 1 of output

- You are calling Display(3, 2, 1)

- The subprogram will first display these values as they are passed.

- 3 and 2 are both copies, and that is what is being displayed, the local (scoped to the subprogram) copy of num1('Z') and num2('Y').

- 1 is being passed as a reference, so num3 will 'basically' be the same address location (and value, by defualt) of the variable passed...in this case num3 address == 'X' address. Changes to num3 will in actuality be changes to 'X'.

- 4 5 6 // line 2 of output

- You are still in Display(3,2,1)

- You have already displayed the initial values passed

- The subprogram changes the local values* of num1, num2, and num3 to 4, 5, 6 respectively.

- * in this case, num3 is in actuality 'X', so 'X' is what is being changed, both the local scope of the subprogram and in the scope of main.

- You display the local num1, num2, num3 (see comment above) and exit

- 6 2 3 // final ouput after subprogram returns

- At this point, the variables used in the subprogram are now out of scope

- Main is using the variables X, Y, and Z

- Becuase X was passed by reference to the subprogram, where it was changed, the local (actually global in this case) value for X will have been changed.

--end email--

Saturday, March 20, 2010

Moving Forward on Reversing (Certification)

So, how did I suprise myself? I was given a practice exam (by someone who had some faith in me) for the GIAC Reverse Engineering Malware certification, and I decided to take it last night while at work. I took the practice exam cold and with no notes intentionally in order to gage where I was at on the topics.

Results:

Unfortunately, I did fail the exam. A 70% is required and I recieved a ~69%. The areas that I messed up on all involved either specific debuggers or some command line tools. I took this test in a little over an hour.

Why am I happy about this? I haven't had any real time in quite a while to play with debuggers, so getting questions regarding those wrong was expected, and an easy fix! Some time spent with some different tools and some review of some notes...no Problem there! The command line tools issue is also an easy fix: I just need to go back to basics on some things and use all the command line tools I can, when I can so that I stay fresh on there availability and options.

Path Forward on Reversing: The important thing to me about taking this test is that I took it cold and quick, and only failed by one question. What this means is that with a little bit of review and practice on forgotten tools and techniques, I should be able to sit the GREM and pass with a very good score! The GREM, GCIH, and GSNA (or maybe CEH), and MCSE are the remaining certs that I want to get. I am not worried about the GCIH, and now the GREM seems like I will do very well also, so this should be a productive year for me as far as certifications!

Friday, March 19, 2010

A brand new OGRE

From www.ogre3d.org:

"We’re very pleased to announce that OGRE 1.7.0 (Cthugha) has now been released, this version is now considered to be our stable release branch."

If you develop ANYTHING for 3D applications and you haven't checked out OGRE (no pun inteded), I think you are seriously missing something.

I had a project last year that involved using OGRE to create a driving simulator that incorporated a collision-avoidance algorithm. The project was 80% of where I wanted it, I got a great grade on it, and plan to extend it. The continued support of OGRE, and its newest release mean that, after I finish this semester, it should be relatively painless to get back on this project. In short, I am just excited to find that OGRE3D is alive and well, and moving forward!

Tuesday, February 23, 2010

Love for the Wireshark

So, here I offer two quick tidbits:

A GREAT reference sheet (there is also a tcpdump sheet here as well):

http://www.packetlife.net/media/library/13/Wireshark_Display_Filters.pdf

An example of the simplicity of the power:

If I know that I want TCP streams 4, 9, 71, and 120, I can do so easily by:

- entering the filter:

tcp.stream eq 4 || tcp.stream eq 9 || tcp.stream eq 71 || tcp.stream eq 120

- applying said filter

- selecting "File" -> "Save As"

- in the bottom left of the 'Save As' Window will be a boxed in area. Select the "Displayed" radio button over the right column.

- Give your file a name

You now have a pcap file of just the tcp streams you want!

Combining the pcap files quickly

An updated to this post, based on the last comment that I had recieved:

I agree that Pcapjoiner and some other tools can do this quickly, as well as add some other functions.

I like the fact though that there are these tools built into Wireshark that allows for the quick combo of just a few targeted pcaps. Basically, a way to get to the down and dirty of analysis on one more connections.

This has given me a GREAT idea for two/three/four more blog posts that would love to do:

1) building my own interface to do these merges, and other massaging that might be helpful. I am already picturing a ton of ways to go with this...maybe best to keep it simple...but it could be a fun projec for myself...adding the ability to run som stats, filters, create some xml/xhtml/html output in addition to output usalbe by tcpdump, wireshark, and ngrep.

2) to play around with the options of mergecap from the command line and try to add some filters by piping to/from ngrep or tcpdump. I think this should work just fine, and would allow for a larger number of files to be processed easily by mergecap in the dump.

3) a perl script that I can just drag a group of files onto for the merge. Perl's CPAN modules provide some excellent support for network traffic

4) a perl script to strip out whatever I want from a fully captured session: the webpage, a pic, the VoIP call, etc. This one might be a little harder....but sounds REALLY fun to me.

dw

<

The other day I finally became fed up with the process of using the Wireshark GUI on Windows to combine more than two PCAP files. I think some folks I know would give me a "Gibbs' Slap" if they knew just how many times I used the GUI to combine 15+ captures. (If you don't know what a "Gibbs' slap" is, you REALLY need to start watching the original NCIS, and NO, not the lousy NCIS:Los Angeles)

Unless things have changed (and I admit to not recently trying), it is generally easy in *nix to pass/search a directory of *.pcap files to the Mergecap.exe util of the Wireshark release, combining ALL the PCAP files into a specified output file. However, and I know this is [NOT] a shock to most people, it is not always as easy to do this same thing on the windows command line (which I was stuck using for this). Of course, I have 20-30 more years before I am a cmd line ninja, so there may be a very easy way to do this...but I don't know it and my friend Google couldn't find it. This left me with a huge whole in my life as I REALLY wanted a better and FASTER way to do this.

Before beginning this walk down my pcap-crazy mental train track, just a quick recap of how to use mergecap.exe:

Usage: mergecap [options] -w

So if I just want to merge some pcap files from a desktop folder into a file called merged.pcap:

C:\Program Files\Wireshark\mergecap -w merged.pcap "c:\users\UnixUsersAreCooler\Desktop\Some Pcap Files\1.pcap" "c:\users\UnixUsersAreCooler\Desktop\Some Pcap Files\2.pcap" "c:\users\UnixUsersAreCooler\Desktop\Some Pcap Files\3.pcap"

This will combine the 1.pcap, 2.pcap, and 3.pcap files into the newly created merged.pcap. However, in case it went un-noticed, that is a LOT of typing to combine three files. Isn't there an easier way?

The Choices:

1) Write a GUI that let me quickly select multiple files, creates the command line string for mergecap with these files, and executes the command. Great! Except, do I really want to create a GUI to do this?

2) Write a command line program to parse a specified folder for all pcaps, create the command string, then execute.

3) Create a script or bat file to do what I want, when I want.

4) Give up and begin a life of cheap booze and cheaper women.

The answers:

1) Nope. Little bit to lazy to spend the time to create the GUI that will make me spend more time navigating directory structures and selecting n files.

2) Nope. Lazy...see number 1 above.

3) This sounds like the way to go.

4) Might work, but then wife and kids might become irritated with such a choice. Back to number 3.

So now that it is decided that I am lazy, and can't chase women or whiskey, it's on to the scripting. There are multiple options here as well, but I kept it simple, dug up some examples, tweaked them for me, and went back to watching Office Space.

The batch file:

combine.bat:

setlocal

set myfiles=

for %%f in (*.pcap) do set myfiles=!myfiles! %%f

Cmd /V:on /c "c:\Program Files\Wireshark\mergecap.exe" -w temp.pcap %myfiles%

What does this mean and where does it go? I created a folder on my desktop for the pcaps I want to merge; the bat file goes here. To run this, I could double-click the file, but I prefer to see it in action . With that in mind, I open up a command prompt in the folder where the bat file is stored, and then execute:

$>combine.bat

The important things I want to point out here is the "Cmd /V:on /c ...". What this does:

- CMD /V:on re-calls the cmd.exe from the system32 directory with the setting of the delaying environment variables (/V:on).

- /c means to "run the following command." In this situation, no environment variables for the PATH for mergecap exist, so I need to call it directly, passing the remainder of the string as the arguments.

- myFiles is an array of all .pcap files in the directory where the script resides. Without the "/V:on" option, only the last file name passed by the 'for' will be present when the command executes.

This entry took longer to create then the batch file, but I hope it helps some angry analyst somewhere.

Friday, February 19, 2010

Version Control and Visual Studio 2008

TortoiseHG (w/ Mercurial) is an open source, and excellent, VCS. The TortoiseHG portion is a GUI interface that is linked to the right-click menu on Windows during install. Mercurial is the command line portion. Mercurial repositories can be converterd to Subversion repositorites, and vice versa, are supported in multiple IDE's such as Eclipse and NetBeans, and is VERY easy to use. I will put a mini listing of common Mercurial commands at the end of this post.

Without further ado, let's get started!

First of all, you need to download to pieces of software:

VisualHG:

- http://visualhg.codeplex.com/

TortoiseHG:

- http://tortoisehg.bitbucket.org/

After downloading these items, install TortoiseHG first (do not restart after install, unless you REALLY, REALLY, want to) :-)

Next, install VisualHG. This is the piece that integrates with VS 2008. After this is installed, NOW restart your computer.

Once your computer has come back up and you have logged in with your complex password, it is time to go through the "grueling" (it really shouldn't take very long to do this) steps of adding the VCS to your VS 2008.

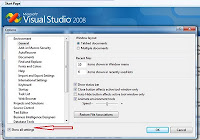

Open Open Visual Studio 2008.

->Tools->Options

- Select "Show all settings" (Screenshot below)

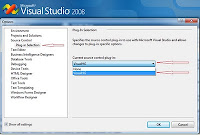

Select "Source Control" in the right-hand menu

- Select "Plug-in Selection"

Select drop down in left side

- Select VisualHG

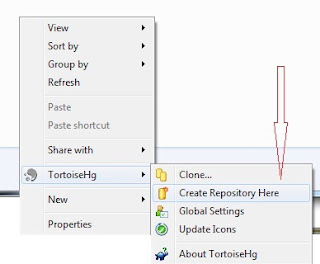

Before creating or using your repository, depending on how you installed, you may need to configure some settings, such as user ID. Configuration and Operation actions in TortoiseHG can be easily accessed from the right-hand GUI options (see screenshot below), or from the command line (again, this is really the Mercurial piece of the software). The command line options will open the respective GUI interface in most instances. I will be using the command line options in this manner.

If you used the most recent release, your username was created during install. If you don't need to create any other configurations, such as web shares, proxy, etc, skip to number 2.

1. To initially configure TortoiseHG:

- hgtk userconfig

2. Once this is done, a local repository needs to be created. This has to be created using TortoiseHG (VisualHG cannot create repositories.

- hgtk init [destination]

- The GUI will open a smaller window. In this window you can confirm or change the reposity location, allow for special files such as .hgignore, and make the repository compatible with older versions of Mercurial.

- I recommend using your current Visual Studio 2008 Projects folder becuase after creating the repository, a commit can be performed, causing everything to be controlled/monitored from that point forward.

- Once this repository has been created, the folder Icon will change to show a checkmark inside of a small green cirle. Initially, if you browse deeper into this folder, you will see the same checkmark in a green cirle in files and folders.

3. To test the version control system, make a simple change to any project file, and then navigate to that file.

- an exclamation mark should be visible on the lowest level file that you changed (and any resource files).

That's it. You should now have a local Source Code Version Control System for you Visual Studio 2008. There are ways to create your own using the VS2008 SDK, but I am not a person with the time to do so right now.

For general information on Mercurial command line options:

$ hg help or $ hg help [command] for more specific help

Generally, the Mercurial command line is intuitive for those who have dealt with VCS's before, and easy to learn for those who have not. Common 'keywords' for actions are the majority of the commands, with paramaters usually limited to comments, username, password, URL, etc. Some example commands:

- $ hg pull

- pulls from a repository

- $ hg commit

- commits changes to the current working directory/repository

- $ hg push

- pushes new files up to a repository

- $ hg status

- displays a status of the current repository (what changed)

- $ hg merge

- merge changes accross branch

- $ hg resolve

- attempt to re-merge unresolved files

- $ hg heads

- show branch heads

An excellent cheat sheet that will get you started with Mercurial commands is at the following link:

http://mercurial.selenic.com/wiki/QuickReferenceCardsAndCheatSheets?action=AttachFile&do=get&target=Mercurial-Cheatsheet-Adrian-v1.0.pdf

Wednesday, February 3, 2010

Windows Rocks???

I had played with the 7 beta when it was first made available, but only enough to do a clean install and look around the GUI. I had quickly decided it looked too much like Vista (which I don't mind so much now) and went back to other tasks. This may have been a mistake.

This last weekend, I decided to re-build one of my boxes in order to have a dedicated development and analysis box. My other dev environment had become too congested as I was admittedly lazy and used it for many other non-dev tasks.

I had a list of software I just had to have, for both personal preference and for school requirements. The major items were:

- SQL Server 2008

- Oracle 11g

- Visual Studio 2008 Pro

- Eclipse (with multiple platforms and tools)

- Android SDK

- NetBeans 6.8

- Visio 2007

- Cisco VPN client

- IIS 7

- UDDI SDK

- Tomcat 6

I was reluctant to slap all of this onto Windows 7 Pro. However, the box I was using was sold to me by Dell with Vista, and an AMD processor later found not to support Vista, and some of the drivers I needed for the box were not compatible with XP Pro. Thus, my decision to try to use Windows 7 Pro.

Windows 7 Pro installed clean AND faster than half of the software listed above. The total time to install 7, the software above, some minor other software, and ALL patches was about six hours (including beer breaks!). I was able to verify that IIS and Tomcat 6 are playing well together, I easily tested my dev tools by slapping together some quick code, and my DB's are accessible, secure, and working well!!!

Bottom line: as much as I love *nix, Windows 7 Pro (So far) ROCKS!!! The only pain I had was that I had to slap on an older .Net Framework in order to register the Microsoft.Uddi.dll.

Monday, January 18, 2010

Putting off the easy things

When I recieved this device, I was already happy with the Belkin router I was using at home and had not done enough with my server to really matter. In fact, with the exception of my wife's laptop and daughter's desktop, I have probably re-loaded all of my other computers at least three times since then. So, I really didn't see any justification for spending the time to move from the Belkin router to the SnapGear SG565. What a mistake!

Installing the SG565 was relatively simple and quick. The only issue that I had was that my cable modem required a hard restart. However, that was such a small thing compared to the immediate benefits! Unfortunately, I am fairly certain that McAfee has since discontinued this line since buying out Secure Computing.

Why am I am so happy with the SG565 (especially when I haven't even finished some of the finer set-up issues)? It really boils down to a list of features, and how easy they have been to set-up (or appear to be, in the case of those I need to finish).

These features:

-2 USB ports that I am now using for a shared printer and shared storage (negates some of the headache of a mixed OS home network).

-SNORT built-in

-ClamAV built-in

-What appears to be an excellent interface for firewall rules

-3G support (through a 3G wireless USB key)

-Stronger (but not too strong) wireless signal

In the short time I have been running the SG565, I have seen a definite improvement in network speed, as well as wireless connection states. With my Belkin, the connections were constantly having to be refreshed due to a weaker signal (over 30 feet, almost true Line Of Sight). Further, the adding of printers (to the Windows OS's so far) seemed to be easier than when I had it shared off of one of my desktops.

All in all, I am extremely happy that I FINALLY got around to setting this up. There are a few items on my to do list relating to this though: moving server to a DMZ, setting up SNORT, better F/W rules, etc. I am just glad to have such an awesome device...especially since I didn't have to pay for it at all! :-)

I am think I am going to start with the SNORT config on this. I am going to get another storage device first and setup a new db. I also want to look more into what version of SNORT this is, if it is upgradable, etc. The device appears to be able to accept syslog, other IDS, and other Firewall inputs, so I might end up not using the SNORT on the SG565, and just use the SG565 to aggregate and right down to the db. The firewall does provide a tcpdump feature to capture packets, and on-the-fly configs can be made to capture all traffic from a specific IP, MAC, rule, etc. Looks like I could really turn this into a huge project...time permitting, of course.

1 project down, 987 more for the year!

Saturday, January 16, 2010

Moving Forward

Some might ask: But isn't a global repository already available with sites such as wilw0rm.com, for example? Not to the level of detail I prefer, or think the security community could use. There are numerous organizations that focus solely on network security, or on attacking that security. Some of these are EmergingThreats, the MetaSploit project, SANS, etc. There are even numerous research groups (acadamia and business) that have their own internal data stores of vectors used. Then there are the legal and procedural requirements of governments and business that require the storage of data about attacks/compromises.

As an example: I was recenlty asked by another network security individual about some javascript that was extremely obfuscated. I was intrgued, worked on it for a bit, and then remembered that I know of some who have repositiores of obfuscated javascript. So I contacted them in hopes that they had already seen this particular vector. The result was that I didn't get help becuase the repository that these individuals had was/is controlled by an anti-virus vendor and the vendor was unwilling to share research results.

It would have been much more promising for the security industry if this other tech could have shared directly the code he found with those that controlled that particular repository. Maybe my buddy could've had an answer much quicker than what it ended up taking. However, and much more to the point, a globally available repository would have alleviated a lot of the headache for my buddy. It would relieve that headache for a lot of people.

What do I propose? I propose that a massive undertaking begin, where everyone pitches in, but I get all the credit. :-)

Seriously though: Why not take ALL the data each time your employer or home network is attacked, convert it to an XML file, and upload the data for everyone else to see (minus internal IP/connection data, user names, etc.). The logistics are harder than I think this sounds to most people, but this could be done. I can already hear some screaming, "But then even more 'would-be' attackers (read: fat, pimply-faced scriptkiddies) would be able to attack us." I answer with the belief that if we (the network security industry as a whole!) were to immediately publish this data for all to see, we reduce the attack landscape currently available to the bad guys. Signatures can be written, rules enabled, and the good guys score. The immediate down-side to this is that the bad guys would have to (and they WOULD) get smarter. However, if we had 10 times as many examples of a particular exploit, wouldn't signature AND behavior based detection be much more feasable, and effective? Of course, I could just be ranting and raving becuase it's a Saturday and college football is over! :-(

I am getting off of the soap-box in favor of doing some work and listening to one of my Prof's, and seeing if the Tiger's have made any more moves in preperation for Spring Training. :-)

Sunday, January 10, 2010

My Vista OGRE, Part III

In any event, I figured I would update (finalize, for now), my comments on using OGRE. The real bottom line on this project is that the requirements changing drastically coupled with only ~10 weeks to do this (in addition to work, my other graduate class, family, Michigan Football, and sleep) really was not enough time to do this properly. I guess I should add that trying to just "dive-in" into this was definitely the wrong approach. With this in mind, my summary here may be updated as I "remember" more of what I did to finish this project. It might be pertinent to add that I did finish the class with an "A," although I don't know my project grade. However, with the weight it carried, it had to have been at least a "B." :-)

If anyone is actually reading this blog and is interested, I did produce a write-up on the project, design decisions, and thoughts on how to make it better. Plus, I have the source code still. Can't ever seem to delete source code. :-)

FINISHED PROTOTYPE:

In the submitted prototype, I ended up using two different data structures (standard Linked List and standard deque) and a couple of algorithms used during each frame updates.

Entry, Exit, and Curve Vectors were all stored in a standard linked list (as IntersectionNodes). While I believe that there are other methods to account for these coordinates, and the actions that need to happen at each one in a scripted world of traffic, I decided that a linked list would be the easiest to maintain and use for these vectors.

The IntersectionNodes themselves stored not only the Vector3 types, but also Ints and Chars to indicate:

- The implicit direction of travel that a car must be facing.

- for example, Intersection 1 is a four-way stop. An entry Vector3 facing North would indicate a stopping point for the car facing North and an corresponding exit Vector3 with the car facing South.

- The number of the intersection.

The deque was used for scripting individual car routes (I have 5 vehicles that are mix of cars, trucks, and a school bus). Initially, it was a simple matter of adding each entry and exit point to the route deque's. During each frame update, the current position (Vector3) is compared to the list of Entry Vectors, which indicates a stopping point for the vehicle (curves were seperated from Entry and Exit Vector lists) and an algorithm for slowing the car was used (below). The hard part of the deque's (made hard by my own inability to just do some research first), and something I think I mentioned already, was calculating the turns through intersections and curves. The algorithms I attempted based on some math (sin, cosine, etc), never "quite" worked right. I ended up calculating manually a minumum number of points of a turn and adjusting the Vector3's x and z values. I added no less than seven Vector3's to between each Entry and Exit point on the deque's.

The stopping and restarting of the vehicles was fairly simple to transform into an algorithm to use during each frame update (I did not seperate this out into another class as I think it would have added more coupling than neccessary). Basically, what I did at each frame update was take the precent of change in distance to the stopping point (<=30 from Entry Vector3), and slowed the car by the same delta. To prevent any negative values in direction (makes the car go backwards), I tested for any distance <= 0.5. If distance was <= 0.5, the algorithm automatically set the wait timer to 30, distance to 0.0, and current position = destination (Entry Vector). This basically "snapped" a car to the stopping point and initiated the stop wait timer.

The Camera views, and toggling them, was a little difficult and time did not allow me to finish this aspect. The current prototype has one camera (movable) that overlooks the "city" I created.

Futre Plans/Increments:

As time permits, I would like to do more with this project, if only for myself. There is an OGRE physics engine that I have seen used for car movements and I would like to incoporate this. I also plan to revisit not only the multiple (toggling) cameras, but a more dynamic way to script out the movements of the cars that are not being "driven" by the user.

OGRE provides support for peripherals suchs as joysticks and I may be interested enough in the future to play with this for the driving simulator. However, right now, the actual "driving" of the car will actually be "camera view."

I also want to look at how storing Vector3's for a turn compares to a more dynamic approach of using a turning algorithm/function. I am curious as to the comparison of:

- storing and popping a turn algorithm as compared to a method that calculates the Vector3 of a set number of points on a turn. It is the memory used versus the processor time used that I am curious about.

The bottom line is that I really want to find some extra time to create the next increment on this prototype. I realized too late that there are some definite improvements I could implement to make this simulator: more realistic, more efficient, and more fun. The OGRE API, and the additional physics engine, definitely provide all the means to transform this prototype into what I want it to be. My middle daughter is only 8 years old, so I have at least seven years to perfect this program. :-)